
Written by Christopher Van Mossevelde

Head of Content at Funnel, Chris has 20+ years of experience in marketing and communications.

Breaking news! Large language models and other artificial intelligence tools may have the power to reshape marketing as we know it.

Okay. That’s not breaking news at all. For the past year, marketers have been inundated by story after story about how ChatGPT will make every copywriter irrelevant or how image generators like MidJourney can make everyone a creative director overnight.

But let’s pump the brakes a little bit. Anyone who has spent any time with these tools can tell you that, while they can seem impressive, they cannot replace a seasoned professional. Nor can they be a substitute for human creativity. Not yet, anyway.

Still, are we totally sure that they can’t replace a couple of human creatives? Well, to attain the definitive answer in 2023, our very own content team decided to run a spirited competition / “highly scientific experiment” (see: not scientific at all). We pitted humans against machines to see who could make the better ad.

So how did we don our very fake lab coats to design and run this competition?

The premise

As modern digital marketers, we use all sorts of digital tools to optimize our ad spend, targeting, and distribution every day. That includes making full use of the advanced algorithms used by platforms like Google Ads and Meta.

We weren’t trying to compete with those AI tools. Instead, we were focused on how generative AI could stack up against its human counterparts. We decided that we needed two teams. The first team would be humans making ads from scratch, using nothing but brain power and hard-earned experience. This was Team Human.

The second team would also be made up of humans, but with a catch. This team would use generative AI to write and design the ad. We called this group Team AI.

See? Very scientific.

Each team would be tasked with creating two ad creatives. All ads would be run through Meta’s A/B testing program. Then, the winning ad from each team would battle it out over another week for all the bragging rights.

Trying to close up any loopholes

As we pitched the idea internally, the team started to notice a few loopholes in the initial premise. First, we know from a Boston Consulting Group study that the combination of humans and AI can unlock huge boosts in performance. That may be a bit unfair for Team Human.

To even the playing field, we mandated that Team AI couldn’t manipulate the copy or visuals themselves. They could only adjust and improve their prompts. However, they had free rein to pick whatever generative AI tools they wanted to use.

Then, our creative team pointed out that they may be able to exploit another loophole: shower ideas. You know how creatives are. They can seem very mysterious, but very often, their best ideas come when they aren’t thinking about work. The best ideas are those that come on the commute home, in the middle of the night, in the shower, and more.

To prevent them from taking advantage of these off-time creative superpowers that the AI team didn’t have, we opted to condense the creation phase to a single block of about an hour or so. Again, very scientific here.

Time for a rules recap

With that, the stage was set. Our copywriter and graphic designer would use good-ole human ingenuity against our social media manager and growth manager, who had the latest AI at their fingertips.

All ads would be run for the same period of two weeks across Facebook and Instagram to see who would emerge victorious. Then, we would select the best performing ads from each team to go head-to-head alongside our normal ads.

So, that’s four ads total, running for two weeks. Then, two ads ran for another week. Got it so far? Good.

Benchmarks and KPIs for the ads

We were monitoring a few metrics for each ad. We were, of course, a bit interested in overall impressions, but our KPI was conversions. That meant the ads had to drive a user to a landing page where said user would register their interest in using Funnel. If an ad was great at sending traffic to our website, but no one registered interest, it was not a good ad. After all, we mean business.

We also kept an eye on cost per click, click-through rate, share of spend, and more.
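For readers who want the definitions behind those acronyms, here is a minimal sketch of how these metrics are usually calculated. The `AdStats` class, the helper functions, and the sample numbers are all hypothetical and purely illustrative; they are not figures from our experiment or anything exported from Meta.

```python
from dataclasses import dataclass


@dataclass
class AdStats:
    """Raw counts for one ad (hypothetical structure for illustration)."""
    impressions: int
    clicks: int
    spend: float       # total cost in dollars
    conversions: int   # e.g. users who registered interest


def ctr(s: AdStats) -> float:
    """Click-through rate: clicks per impression."""
    return s.clicks / s.impressions


def cpc(s: AdStats) -> float:
    """Cost per click: spend divided by clicks."""
    return s.spend / s.clicks


def cpm(s: AdStats) -> float:
    """Cost per mille: spend per 1,000 impressions."""
    return s.spend / s.impressions * 1000


def conversion_rate(s: AdStats) -> float:
    """Share of clicks that turned into a conversion."""
    return s.conversions / s.clicks


# Made-up numbers, chosen only to keep the arithmetic easy to follow.
example = AdStats(impressions=10_000, clicks=40, spend=150.0, conversions=4)

print(f"CTR: {ctr(example):.2%}")
print(f"CPC: ${cpc(example):.2f}")
print(f"CPM: ${cpm(example):.2f}")
print(f"Conversion rate: {conversion_rate(example):.0%}")
```

An ad can look healthy on CTR and CPC and still fall short on conversions, which is why we treated conversions as the KPI and the rest as supporting signals.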

Notes from the creative sessions

It was incredibly interesting to watch the two teams create and iterate on their ads. They even shared some key experiences from the process.

“I love the condensed three-hour creative session,” said Ric, our creative designer. “It really helps you focus on nailing that idea quickly.”

His partner Sean, our copywriter, also enjoyed the intensive session but missed other elements of a more traditional process.

“We just kind of had to go with our gut instinct on the concept at a certain point,” he said. “We didn’t have the opportunity to bounce the ideas off anyone else who has a different perspective or background than us.”

Team AI wasn’t without its challenges or new learnings either.

“It was really interesting to just dive in and start working with some of these tools,” said Alex, our growth manager. “It just generates ideas so fast, allowing you to iterate and crack on really quickly.”

Leah, our social media manager, was also impressed at her team’s pace during the creative process.

“It was somewhat shocking to see how quickly and easily we were able to create a couple of ads,” she said. “I mean, we’re not marketing novices, but we were able to create two entire ad creatives in a snap. No copywriting and no graphic design required.”

Leah did have a caveat, though.

“Was it the most beautiful ad creative you’ve ever seen? Well, no,” she said. “You would want a bit more finesse and expertise for a big-budget ad campaign, but this could be a total game changer for small and mid-sized businesses with limited resources.”

The winner

Remember, in the first phase of our experiment, all ads were run together in an A/B test. The “A” ad creatives were from Team Human, while the “B” ads were from Team AI. Within the first week of this phase, any difference in performance was negligible. However, during the second week, one of the human-made ads really took off. That ad creative was moved on to the final round.

Meanwhile, Team AI didn’t see this kind of boost in conversion rate, although one of the ads scored on some of our other non-KPI metrics like CTR and CPC. So, the decision was made to move this better-performing creative onward.

Overall, though, Team Human took round one.

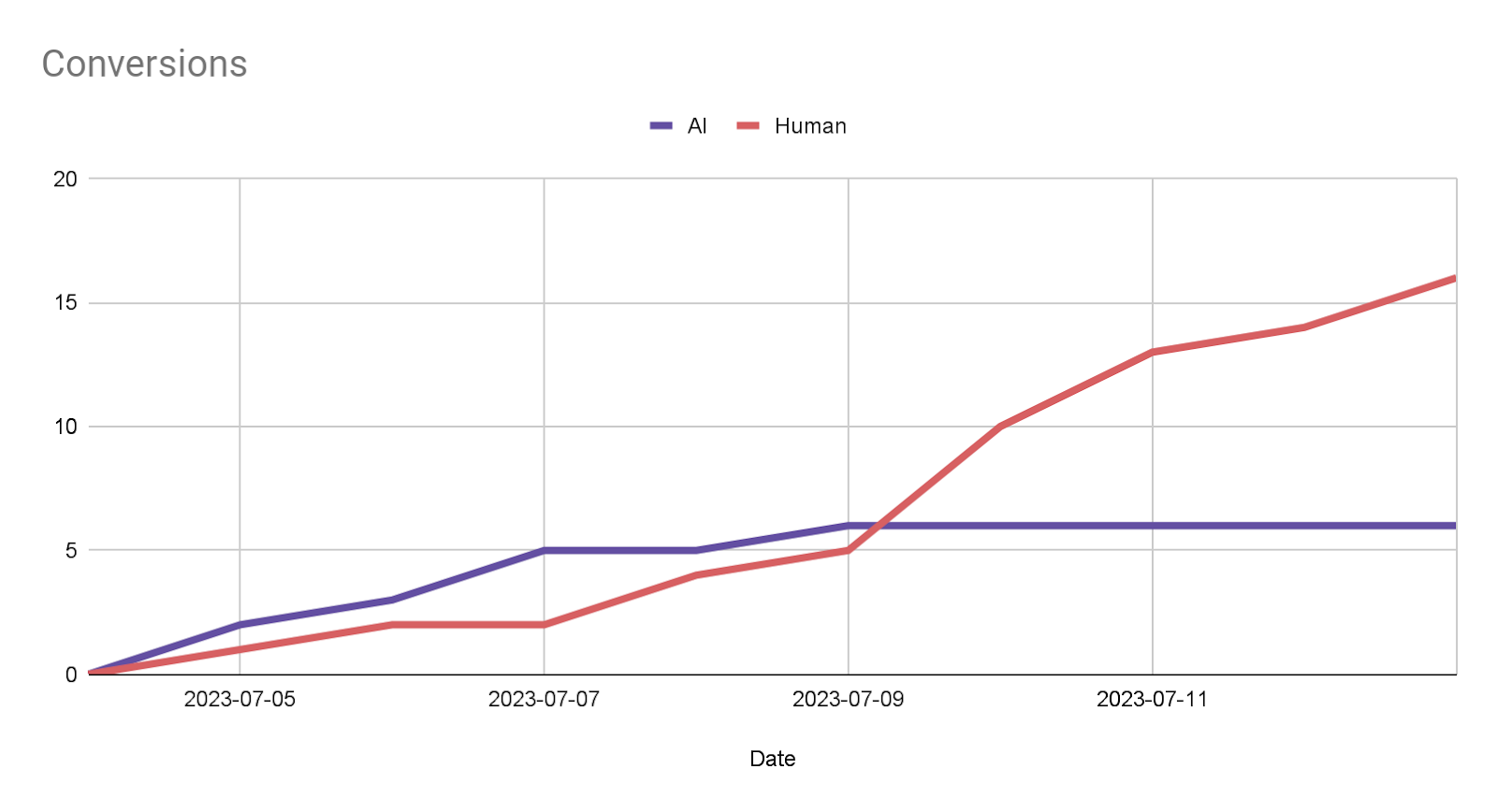

Total leads earned by Human (red) and AI (blue) ads in phase one

We then plugged the two finalist ads back into Meta’s ad platform for the ultimate showdown. At the end of this second phase, we discovered that the algorithm had picked a winner…

Team Human!

That’s right, the super algorithm at the core of Meta’s online ad machine preferred the human-made creative to the AI-made one.

As you can see in the results below, Team Human’s ad won for impressions, click share, and the amount of budget that the algorithm allotted to the creative. The ad won on these fronts because Meta’s algorithm deemed it the more successful ad.

| Ad | % of Impressions | % of Clicks | % of Cost |
| --- | --- | --- | --- |
| AI | 20.48 | 12.79 | 20.08 |
| Human | 79.52 | 87.21 | 79.92 |

| Ad | CPC | CPM | CTR |
| --- | --- | --- | --- |
| AI | $6.18 | $13.43 | 0.22% |
| Human | $3.61 | $13.71 | 0.38% |
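As a quick sanity check, the share figures and the CPC and CTR figures should tell the same story. The sketch below is our own back-of-the-envelope arithmetic, not anything produced by Meta: the helper names are hypothetical, and it assumes the percentages are each ad's share of the same final-round totals. Under that assumption the totals cancel out, so relative CTR and CPC can be derived from the shares alone.

```python
# Shares (in percent) copied from the final-round results reported above.
shares = {
    "AI":    {"impressions": 20.48, "clicks": 12.79, "cost": 20.08},
    "Human": {"impressions": 79.52, "clicks": 87.21, "cost": 79.92},
}


def relative_ctr(ad: str, other: str) -> float:
    """CTR of `ad` divided by CTR of `other`, derived from shares only."""
    a, b = shares[ad], shares[other]
    return (a["clicks"] / a["impressions"]) / (b["clicks"] / b["impressions"])


def relative_cpc(ad: str, other: str) -> float:
    """CPC of `ad` divided by CPC of `other`, derived from shares only."""
    a, b = shares[ad], shares[other]
    return (a["cost"] / a["clicks"]) / (b["cost"] / b["clicks"])


# Ratios implied by the share figures.
ctr_from_shares = relative_ctr("Human", "AI")
cpc_from_shares = relative_cpc("AI", "Human")

# Ratios implied by the reported CTR and CPC figures.
ctr_reported = 0.38 / 0.22
cpc_reported = 6.18 / 3.61

print(f"Human CTR vs. AI CTR: {ctr_from_shares:.2f}x (shares), {ctr_reported:.2f}x (reported)")
print(f"AI CPC vs. Human CPC: {cpc_from_shares:.2f}x (shares), {cpc_reported:.2f}x (reported)")
```

Both routes land at roughly 1.7x: the human-made ad earned clicks at about 1.7 times the rate of the AI-made ad, and each of those clicks cost a little over half as much. The small gaps between the two routes are what you would expect from rounding in the reported figures.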

To be fair, this was a fairly quick experiment, and we aren’t talking about billions of impressions. However, it was interesting to see the growth curve of the human-made creative from phase one continue into the championship round.

The “scientific” method

Like we said, this wasn’t the most rigorous experiment with control groups, nor was it some kind of double-blind study. Rather, it was a great excuse to see how these systems work, how we could utilize them in our own processes, and how the current form of AI compares to humans who have done this professionally for decades.

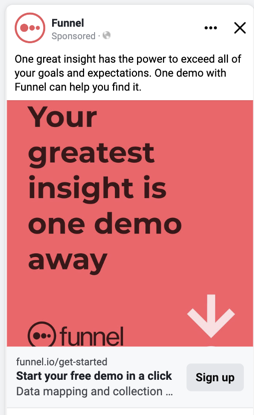

The Team Human ad creative (and winner of the competition)

The Team AI ad creative

What to take away from this competition

The main takeaway from our experiment is that you need to test and try things out. The same perspective we express when considering marketing mix modeling or advanced data analysis also holds true for generative AI. By messing around with a couple of these tools, we came up with new ideas for how we could automate our different processes.

One other takeaway for all marketing teams: great ads need time to cook. Our teams had a very compressed timeline to conceptualize, write, and design their ads. Human creatives benefit from developing concepts that can tell a rich story, then bouncing those ideas off other team members with varied perspectives to make sure they work.

Conversely, your teams will need more than an hour (or less) to learn how best to use these new generative AI tools. And still, those ads should also be reviewed internally to ensure the best message and visual is served to your audience.
